A Reminder on 3D Graphics in Gaming

Video game graphics rendering has three basic steps: Vertex Shading, Rasterization and Fragment Shading. Many modern games add further steps, but these three core steps have been used for decades in countless games on both consoles and computers. They form the essential graphics algorithm behind pretty much every game you play.

Let's begin with the first step, Vertex Shading. The basic idea is to take the meshes (the geometry) of all the objects in three-dimensional space and use the camera's field of view to calculate where each object lands on a two-dimensional window called the viewscreen, which is the image sent to the display. The player only sees what falls inside the camera's field of view, so objects outside it do not need to be rendered, which reduces the amount of work. Conceptually, each model is moved onto the viewscreen as one piece, but in practice each of a model's vertices, which can number in the hundreds of thousands, is moved individually.

So let's focus on a single vertex. Moving a vertex (and, by extension, the triangles and models it belongs to) from the 3D world onto a 2D display is done with three main transformations: first from model space to world space, then from world space to camera space, and finally from the camera's perspective field of view onto the viewscreen. To perform these transformations, the software uses the vertex's X, Y, Z coordinates in model space; the model's scale, position and rotation in world space; and finally the camera's position, rotation and field of view. Graphics software packs all these numbers into transformation matrices and multiplies them together, producing the X and Y coordinates of the vertex on the viewscreen. The depth value (the Z coordinate) is kept for later, to determine which objects block others. Once all three vertices of a triangle have been transformed with the same matrix math, that triangle appears on the 2D viewscreen. Every remaining vertex inside the camera's field of view goes through the same series of transformations, which adds up to an enormous amount of matrix math. Video game consoles and the GPUs in graphics cards are designed to sustain this workload continuously, rendering the whole triangle mesh again every few milliseconds.
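The chain of transformations described above can be sketched in Python. This is a minimal illustration under simplifying assumptions, not how any particular engine does it: 4×4 matrices are nested lists, the model matrix only scales and translates (no rotation), the camera has no rotation so its view matrix is a plain translation, and the projection follows the OpenGL-style convention where the camera looks down the negative Z axis. The helper names (`translate`, `scale`, `perspective`, `to_screen`) are hypothetical.

```python
import math

def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def apply(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[i][k] * v[k] for k in range(4)) for i in range(4)]

def translate(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]

def scale(s):
    return [[s, 0, 0, 0], [0, s, 0, 0], [0, 0, s, 0], [0, 0, 0, 1]]

def perspective(fov_y_deg, aspect, near, far):
    """OpenGL-style perspective projection (camera looks down -Z) into clip space."""
    f = 1.0 / math.tan(math.radians(fov_y_deg) / 2)
    return [
        [f / aspect, 0, 0, 0],
        [0, f, 0, 0],
        [0, 0, (far + near) / (near - far), 2 * far * near / (near - far)],
        [0, 0, -1, 0],
    ]

def to_screen(vertex, model, view, proj, width, height):
    """Model -> world -> camera -> clip space, then map to pixel coordinates."""
    mvp = matmul(proj, matmul(view, model))     # combine all three transforms into one matrix
    x, y, z, w = apply(mvp, [*vertex, 1.0])     # homogeneous coordinates
    ndc_x, ndc_y, ndc_z = x / w, y / w, z / w   # perspective divide: visible range is [-1, 1]
    px = (ndc_x + 1) / 2 * width                # normalized X -> pixel column
    py = (1 - ndc_y) / 2 * height               # flip Y so that row 0 is the top of the screen
    return px, py, ndc_z                        # keep depth for the visibility test later

# Scale the model by 2, then place it 5 units in front of the camera.
model = matmul(translate(0, 0, -5), scale(2))
# Camera at the origin with no rotation; for a camera at (cx, cy, cz) this would be translate(-cx, -cy, -cz).
view = translate(0, 0, 0)
proj = perspective(90, 16 / 9, 0.1, 100)
print(to_screen((0, 0, 0), model, view, proj, 1920, 1080))  # X = 960.0, Y = 540.0: the screen centre
```

Multiplying the three matrices once and reusing the combined matrix for every vertex of the model is the reason the transforms are expressed as matrices in the first place.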

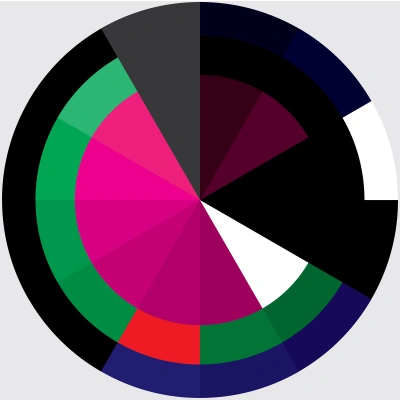

Moving all the vertices onto a 2D plane completes the first step. A single triangle consists of three vertices, and the graphics software now needs to figure out which specific pixels on your display that triangle covers. This process is called Rasterization. An 8K monitor, or even an ordinary home TV, has millions of pixels. Using the X and Y coordinates of a triangle's vertices on the viewscreen, the GPU calculates where the triangle lies in that massive grid and which pixels it covers. Those pixels are then shaded using the colour or texture assigned to that triangle. In this way, rasterization turns each triangle into fragments: groups of pixels that come from the same triangle and share its colour or texture. The software then moves on to the next triangle, shades the pixels it covers, and repeats this process for every remaining triangle on the viewscreen. By writing the appropriate Red, Green and Blue colour values of each triangle into its pixels, an 8K image is formed in the frame buffer and sent to the display. Triangles that are sent down the rendering pipeline but are blocked or overlapped by other triangles are hidden, so they must not appear in the final image. Determining which triangles are shown is called the Visibility problem, and it is solved using a Z-buffer, also called a depth buffer.
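Rasterization with a Z-buffer can be sketched in a few lines of Python. The text does not say how coverage is tested; this sketch uses edge functions, one common approach, chosen here for illustration. It assumes triangles are already in pixel coordinates with a depth value per vertex, each triangle has one flat colour, a pixel counts as covered when its centre lies inside the triangle, and a smaller depth means closer to the camera. Depth is interpolated linearly in screen space, ignoring perspective correction. The names `edge` and `rasterize` are hypothetical.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area test: the sign tells which side of edge A->B the point P lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)

def rasterize(triangles, width, height, background=(0, 0, 0)):
    """Draw flat-coloured triangles into a frame buffer, resolving visibility with a Z-buffer.

    Each triangle is ((x0, y0, z0), (x1, y1, z1), (x2, y2, z2), (r, g, b)) in pixel coordinates.
    """
    frame = [[background] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]  # nothing drawn yet: infinitely far
    for (x0, y0, z0), (x1, y1, z1), (x2, y2, z2), rgb in triangles:
        area = edge(x0, y0, x1, y1, x2, y2)
        if area == 0:
            continue  # degenerate triangle covers no pixels
        # Only scan the triangle's bounding box, clamped to the screen.
        min_x, max_x = max(int(min(x0, x1, x2)), 0), min(int(max(x0, x1, x2)) + 1, width)
        min_y, max_y = max(int(min(y0, y1, y2)), 0), min(int(max(y0, y1, y2)) + 1, height)
        for py in range(min_y, max_y):
            for px in range(min_x, max_x):
                cx, cy = px + 0.5, py + 0.5  # sample at the pixel centre
                # Barycentric weights; dividing by area makes them positive inside for either winding.
                w0 = edge(x1, y1, x2, y2, cx, cy) / area
                w1 = edge(x2, y2, x0, y0, cx, cy) / area
                w2 = edge(x0, y0, x1, y1, cx, cy) / area
                if w0 < 0 or w1 < 0 or w2 < 0:
                    continue  # pixel centre is outside the triangle
                z = w0 * z0 + w1 * z1 + w2 * z2  # interpolated depth of this fragment
                if z < depth[py][px]:  # depth test: keep only the closest fragment
                    depth[py][px] = z
                    frame[py][px] = rgb
    return frame
```

Because each pixel keeps the closest depth seen so far, the order in which triangles are submitted does not change the final image: a near triangle drawn before a far one still wins every pixel they share.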

At this point, each covered pixel of the image has been assigned to a triangle. However, simply painting the pixels by number with flat colours is not enough. To make the scene more realistic, the software also needs to account for the direction and strength of the light, the position of the camera, and the reflections and shadows cast by other models in the scene. Fragment Shading is therefore used to shade each pixel with accurate illumination, making the scene as realistic as possible. The basic idea is that a surface pointing directly at a light source, such as the sun, is shaded brighter, whereas a surface facing perpendicular to, or away from, the light is shaded darker. To calculate a triangle's shading, two attributes are essential: first, the direction of the light, and second, the direction the triangle's surface is facing.
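The brighter-when-facing-the-light rule is commonly modelled with Lambert's cosine law (named here as an illustrative choice; the text does not name a lighting model): diffuse brightness is the cosine of the angle between the surface's facing direction and the direction towards the light, computed as the dot product of the two unit vectors and clamped at zero for surfaces that face away. A minimal Python sketch, assuming both directions are 3D vectors and that `to_light` points from the surface towards the light; `lambert` is a hypothetical name.

```python
import math

def normalize(v):
    """Scale a 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(surface_dir, to_light):
    """Diffuse brightness in [0, 1]: 1 facing the light, 0 perpendicular to it or facing away."""
    n = normalize(surface_dir)
    l = normalize(to_light)
    cos_angle = sum(a * b for a, b in zip(n, l))  # dot product of unit vectors = cosine of angle
    return max(0.0, cos_angle)                    # surfaces facing away get no direct light

print(lambert((0, 0, 1), (0, 0, 1)))   # facing the light: 1.0
print(lambert((0, 0, 1), (1, 0, 1)))   # light at 45 degrees: about 0.707
print(lambert((0, 0, 1), (1, 0, 0)))   # perpendicular to the light: 0.0
print(lambert((0, 0, 1), (0, 0, -1)))  # facing away from the light: 0.0
```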

The direction each triangle is facing is called its surface normal: a vector perpendicular to the plane of the triangle. The surface normal is compared with the direction of the light to measure how strongly the light hits the triangle, which gives the correctly shaded colour for that triangle. This process adjusts the triangle's RGB values, and as a result a model's surface shows a range from light to dark depending on how its individual triangles face the light.
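For a triangle, the surface normal can be computed as the cross product of two of its edges, and the resulting brightness can then scale the triangle's RGB values. A minimal Python sketch under stated assumptions: counter-clockwise vertex order marks the front face (a common convention, not something the text specifies), there is a single directional light, and a small hypothetical `ambient` term keeps surfaces facing away dim rather than pure black. The names `face_normal` and `shade_triangle` are hypothetical.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def cross(a, b):
    """Cross product: a vector perpendicular to both a and b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def face_normal(v0, v1, v2):
    """Unit normal of the triangle's plane; counter-clockwise vertices face towards it."""
    return normalize(cross(sub(v1, v0), sub(v2, v0)))

def shade_triangle(v0, v1, v2, rgb, to_light, ambient=0.1):
    """Scale the triangle's RGB by how directly its normal faces the light."""
    n = face_normal(v0, v1, v2)
    l = normalize(to_light)
    diffuse = max(0.0, sum(a * b for a, b in zip(n, l)))  # cosine of angle, clamped at 0
    brightness = min(1.0, ambient + (1 - ambient) * diffuse)
    return tuple(round(c * brightness) for c in rgb)

tri = ((0, 0, 0), (1, 0, 0), (0, 1, 0))              # lies in the XY plane, normal points along +Z
print(shade_triangle(*tri, (200, 100, 50), (0, 0, 1)))   # lit head-on: (200, 100, 50)
print(shade_triangle(*tri, (200, 100, 50), (0, 0, -1)))  # lit from behind: (20, 10, 5), ambient only
```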

The vector and matrix math used in video game rendering is rather complicated, but computer hardware and software keep improving every year. There is no telling how much calculation and rendering it will take to achieve realism on par with human visual perception.